ChatGPT, the artificial intelligence (AI) chatbot from the US company OpenAI, already has more than 100 million users. Microsoft Office Copilot is available for an additional charge to a worldwide user base of 1.5 billion. This means that around 20% of the world's population has low-threshold access to AI tools. Given these magnitudes, are we at the beginning of an AI hype, or has it already peaked? Is AI a good business at all, and if so, for whom? And what is AI actually being used for?

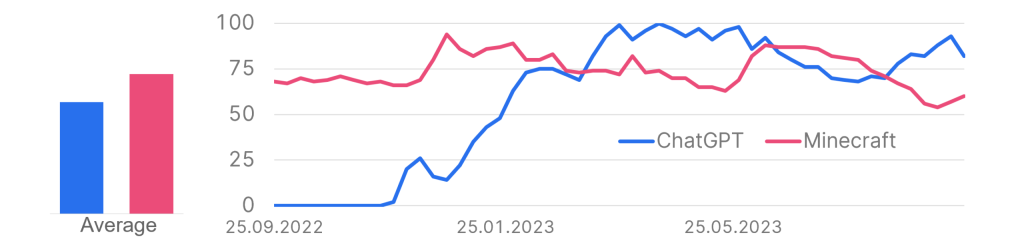

For the last question, Google search statistics provide clues. The graph shows the search frequency of “ChatGPT” (blue) and of the video game “Minecraft” (red), which is popular among children. Unsurprisingly, searches for ChatGPT have exploded since the end of 2022. More interesting, however, is the drop in interest between June and September, while interest in Minecraft rose over the same period. One hypothesis is that ChatGPT is used largely by students, who prefer to play video games during the summer break.

Google search statistics for the terms “ChatGPT” and “Minecraft”

Development over the past year, as of 26.09.2023

Source: Google Trends. Note: Past performance is not a reliable indicator of future performance.

Beyond help with homework, many companies are currently struggling to come up with AI use cases. AI tools that are initially hailed as groundbreaking innovations often turn out to be little more than gimmicks. Companies also encounter internal resistance, for example when AI is used to record meetings and store summaries of what was said: an unfortunate or misunderstood remark made during a heated discussion can then end up in the company archive forever.

The two major use cases of the current AI generation remain the rapid composition of professional-sounding texts and the generation of functional computer code. As a result, AI acts as a force multiplier, especially in the tech sector. While machine learning plays a role in the pharmaceutical industry and materials research, the models used there have little to do with the models behind the currently popular chatbots and image generation tools.

AI – a shaky business model

With more than 100 million registered users, industry leader OpenAI originally expected revenues of more than USD 200 million in 2023. In the meantime, however, sales of USD 1 billion are expected for the next twelve months. A good deal? Far from it: according to industry experts, every dollar of revenue is offset by an estimated USD 1.13 in running costs, and this does not even include the cost of developing the models further. For GPT-4, currently the best-performing model, development costs amounted to more than USD 100 million. For the next iteration, GPT-5, costs are expected to be much higher.¹
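The cost ratio quoted above can be turned into a rough annual figure. The following Python sketch simply multiplies the two estimates from the text (USD 1 billion in expected revenue and USD 1.13 in running costs per dollar of revenue); it is back-of-the-envelope arithmetic under those assumptions, not a model of OpenAI's actual accounts, and it ignores development costs entirely.

```python
# Back-of-the-envelope estimate using only the two figures quoted in the text.
EXPECTED_REVENUE_USD = 1_000_000_000  # expected revenue over the next twelve months
RUNNING_COST_PER_REVENUE_USD = 1.13   # estimated running costs per dollar of revenue

running_costs = EXPECTED_REVENUE_USD * RUNNING_COST_PER_REVENUE_USD
result_before_development = EXPECTED_REVENUE_USD - running_costs

print(f"Running costs: USD {running_costs / 1e9:.2f} billion")
print(f"Result before development costs: USD {result_before_development / 1e6:.0f} million")
```

Under these assumptions, the running business alone would lose roughly USD 130 million over twelve months, before any money is spent on the next model generation.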

In contrast to the internet revolution of the 2000s, with which the current AI hype is often compared, AI tools require much more expensive infrastructure. For example, a search query that a user enters in the classic browser window costs Google a fraction of a cent in infrastructure and energy. With ChatGPT, the cost calculation is more complicated: the costs depend on the length of both the question and the answer. An example:

My question to GPT-4 is “What is the best retail bank in Austria?” This question consists of eight words plus a punctuation mark, a total of nine so-called tokens (text fragments, here roughly one per word), which serve as the model's input. In addition, the model receives a system prompt with each query that is invisible to the user. This prompt controls the tone of the answer and might read something like this: “You are a helpful AI tool. Your name is ChatGPT. You answer questions concisely and accurately. Your tone is polite and open.” – 53 more tokens.

The answer provides a list of Austrian banks (with Erste Bank, of course, mentioned first), each with a short description. In total, the response consists of 230 tokens, which puts the total for the request at about 300 tokens. At an estimated cost of USD 0.000178 per token, the request costs OpenAI about USD 0.05. By comparison, the list price a user pays for such a request is about USD 0.02, so OpenAI loses about USD 0.03 on this prompt. And that does not even account for the heavily used free version.²
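The per-request calculation above can be reproduced in a few lines. This Python sketch uses only the figures from the GPT-4 example (9 question tokens, 53 system-prompt tokens, 230 answer tokens, an estimated USD 0.000178 per token and a list price of about USD 0.02); the helper `request_economics` is a hypothetical name for illustration. Like the text, it applies one blended rate to all tokens, whereas real price lists usually charge input and output tokens differently.

```python
def request_economics(input_tokens: int, output_tokens: int,
                      cost_per_token: float, list_price: float) -> tuple[int, float, float]:
    """Return (total tokens, cost to the provider, provider margin) for one request."""
    total_tokens = input_tokens + output_tokens
    cost = total_tokens * cost_per_token
    return total_tokens, cost, list_price - cost


# Figures from the GPT-4 example: question + system prompt as input, the answer as output.
tokens, cost, margin = request_economics(
    input_tokens=9 + 53,
    output_tokens=230,
    cost_per_token=0.000178,  # estimated cost per token to OpenAI
    list_price=0.02,          # approximate list price a user pays for this request
)
print(f"{tokens} tokens, cost USD {cost:.3f}, margin USD {margin:.3f}")
# prints: 292 tokens, cost USD 0.052, margin USD -0.032
```

Because cost grows linearly with the token count, longer answers or longer hidden system prompts widen the loss per request proportionally.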

Since costs scale with request volume, the industry will sooner or later have to take one or more of the following three paths:

- Price increases – once the market has been divided up among the dominant players, prices for AI services can be raised gradually.

- Advertising – chatbot responses could in future be enriched with advertising texts. However, this is technically much more complex than placing ads next to simple search results and could undermine the credibility of the service.

- Bundling – for a surcharge of 30 euros per user per month, Microsoft Office users will be able to use AI functions in the future. The goal is to sell the service to large customers with thousands of users, knowing that in this context utilization of the service will remain rather low.
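Why low utilization matters for bundling can be shown with a simple break-even calculation. This Python sketch is hypothetical: it assumes the 30-euro surcharge applies per user and month, treats euros and dollars as roughly at par, and reuses the per-request cost from the GPT-4 example in the text (292 tokens at an estimated USD 0.000178 each); `break_even_requests` is an illustrative name, not a real API.

```python
# Per-request compute cost from the GPT-4 example in the text:
# 292 tokens at an estimated USD 0.000178 per token (about USD 0.05).
COST_PER_REQUEST_USD = 292 * 0.000178

# Assumptions for illustration: the surcharge is charged per user and month,
# and euros and dollars are treated as roughly at par.
MONTHLY_SURCHARGE_USD = 30.0


def break_even_requests(monthly_fee: float, cost_per_request: float) -> int:
    """Number of requests per user and month that the flat fee can cover."""
    return int(monthly_fee / cost_per_request)


limit = break_even_requests(MONTHLY_SURCHARGE_USD, COST_PER_REQUEST_USD)
print(f"The surcharge covers about {limit} requests per user and month")
```

Under these assumptions, the fee covers about 577 such requests per user and month. Every licensed user who stays well below that threshold subsidizes the heavy users, which is why a large, lightly used seat base makes the bundle attractive to the provider.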

The unclear business case for AI chatbots also explains why industry giants such as Amazon, Google, and Meta are so hesitant to venture into the field, even though they have some of the most capable researchers and developers, as well as the best hardware, in-house. Now as before, the advertising business remains the engine of the internet giants, and it stands to profit only marginally from the current AI trends.

GPU-rich vs. GPU-poor

As mentioned earlier, training AI models is a very expensive endeavor. Despite its first-mover advantage, OpenAI also had to seek shelter under Microsoft's large capital umbrella. Over the next few years, Microsoft will gradually invest USD 10 billion on top of the USD 2 billion it has already invested.

The key point here is that OpenAI is thereby tied exclusively to Microsoft's Azure cloud infrastructure, and presumably half of the investment will be settled in the form of compute credits, i.e. computing resources rather than cash. One could compare the situation to a small pop-up store that sells shares to a wholesaler and in return is allowed to use empty parts of the wholesaler's warehouse.

When the OpenAI founder claims to have “achieved AGI internally”, i.e. to have created an AI with generalized intelligence that can therefore be applied even to areas outside its training data, that should give you pause. OpenAI is flirting with an IPO, which at a valuation of USD 90 billion (for comparison, Mercedes-Benz is valued at around USD 74 billion) would raise enough capital to train GPT-5. The AGI myth comes in handy to distract from the less rosy profit situation.

In addition, the most powerful GPU chips, which are needed to compute AI models, are sold out and only available to customers with long-term supply contracts. The top model costs around EUR 25,000, and at least 200 of them are needed to train a new model. In short, only the tech giants are currently able to provide large computing capacities. There are no European providers of this kind. This does not necessarily mean that European companies will fall behind in AI, however, since access to the providers' resources should, at least on paper, be governed by market principles.

But even the big American cloud providers are ultimately on a long leash held by Nvidia, the undisputed leader in AI chips, and its contract manufacturer TSMC. Nvidia owes its near-monopoly position to a long-term strategy that is now bearing abundant fruit. As early as 2007, for example, Nvidia released the CUDA programming interface, which made it possible to use the company's own GPUs efficiently for general-purpose computation, including AI applications. Competitor AMD only released a comparable solution, ROCm, in 2016. By then, most developers in the AI field had already specialized in CUDA.

The high-performance chips, built on a 4 nm process, are currently manufactured exclusively by TSMC in its plants in Taiwan. Lured by subsidies, TSMC has recently drawn attention with groundbreakings for new plants in the US, Europe and India. For the time being, however, these new plants will only produce less advanced chips. This means that Taiwan will continue to set the pace for the AI industry for the next 5 to 10 years. Geopolitical tensions in the region would therefore directly affect the future prospects of AI.

AI 2030 – a cautious outlook

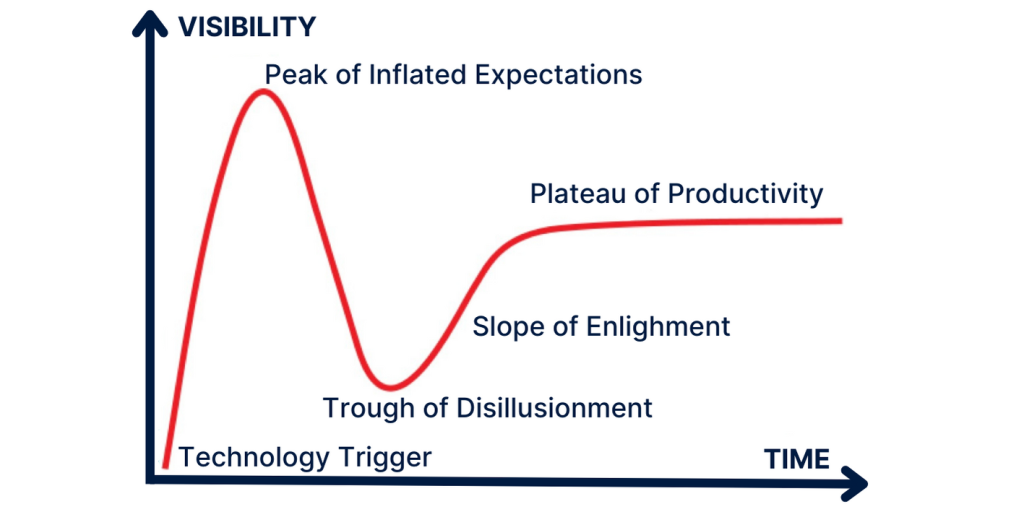

Judging by monthly visits to the ChatGPT website, the peak of euphoria seems to have passed in May 2023, at 1.9 billion visits. By August, the number had already “shrunk” to 1.6 billion. According to the hype cycle model for new technologies developed by Gartner Research, the “peak of inflated expectations” is followed by the “trough of disillusionment”, in which many lofty promises turn out to be pipe dreams and many users turn away from the technology. At the end of the hype cycle comes the “plateau of productivity”, the phase in which a new technology can exploit its full potential.

I am skeptical that this phase will be reached by 2030. Although many companies are currently pledging major investments in AI or claim to have defined hundreds of use cases, there are not enough resources to implement them, and the lofty announcements are often mere lip service intended to cover up problems elsewhere. A downturn in the global economy would presumably take the wind out of the sails of such announcements.

In addition, it should be kept in mind that the Transformer architecture popularized by ChatGPT was developed by Google researchers back in 2017.³ Even in the AI sector, groundbreaking developments take years to reach market maturity, and, as explained above, it has not yet been proven that today's AI chatbots even represent a viable business model.

The semiconductor industry will have a decisive influence on the future development of AI. With the 4 nm manufacturing process, chipmaking is running up against physical limits: the extreme ultraviolet light used to pattern the circuits onto the silicon wafer has a wavelength of 13.5 nm, already larger than the nominal structure size. In the future, the energy efficiency of chips will play a greater role. This could allow Nvidia's competitors, which have focused heavily on this topic in recent years, to catch up.

Furthermore, the variety of chips in use will increase. GPUs (graphics processing units), commonly referred to as graphics cards, were originally designed to render 3D environments, a capability that even the most highly specialized models still retain. Their usefulness for training complex algorithms was originally only a side benefit. The TPUs (tensor processing units) that Google is pushing, on the other hand, have shed this graphics legacy and are designed solely for AI applications. Quantum computing also casts a long shadow on the horizon. Here, industry experts anticipate a burst of innovation in the late 2020s, when QPUs (quantum processing units) are expected to catch up with classical chips. After that, global computing power could increase exponentially.

Chatbots will still be popular in 2030, and two categories are likely to emerge: free chatbots based on open-source architectures that include advertising features, and high-performance chatbots that sit on top of enterprise databases and dominate the business space. The latter will also tap into companies' existing computing resources, allowing for better cost management. There will also be a labeling requirement for AI-generated text and images.

In my opinion, the next quantum leap will happen in the field of reinforcement learning. Unlike chatbots, which produce the most plausible answers based on word statistics but have no understanding of what is being said, reinforcement learning is about learning concrete sequences of actions from real-world problems by trial and error. Chess programs such as DeepMind's AlphaZero, which taught itself the game through self-play, are a classic example. In the future, AI algorithms may be able both to understand arbitrary real-world processes and to learn how to improve them. Coupled with advancing robotization, this could realize the dream of machines interacting autonomously with the real world.
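The core idea of reinforcement learning, working out which action pays off in which situation from rewards alone, can be illustrated with tabular Q-learning, one of the simplest algorithms of its kind. The Python sketch below is a toy under stated assumptions: the five-state corridor, the reward of 1 at the goal, the helper names `step` and `q_learning`, and all hyperparameters are hypothetical choices for illustration and have nothing to do with chess engines or robots.

```python
import random

# Hypothetical toy environment: a corridor of states 0..4. The agent starts in state 0;
# reaching state 4 ends the episode with a reward of 1, every other step yields 0.
N_STATES = 5
GOAL = N_STATES - 1
ACTIONS = (-1, +1)  # index 0 = move left, index 1 = move right


def step(state: int, action: int) -> tuple[int, float, bool]:
    """Apply an action and return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), GOAL)
    done = next_state == GOAL
    return next_state, (1.0 if done else 0.0), done


def q_learning(episodes: int = 500, alpha: float = 0.5, gamma: float = 0.9,
               epsilon: float = 0.1, seed: int = 0) -> list[list[float]]:
    """Tabular Q-learning: estimate the value of each (state, action) pair by trial and error."""
    rng = random.Random(seed)
    q = [[0.0] * len(ACTIONS) for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon:
                # Explore: try a random action
                a = rng.randrange(len(ACTIONS))
            else:
                # Exploit: pick the best known action, breaking ties at random
                best = max(q[state])
                a = rng.choice([i for i, v in enumerate(q[state]) if v == best])
            next_state, reward, done = step(state, ACTIONS[a])
            # Nudge the estimate toward the reward plus the discounted value of the best next action
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


q = q_learning()
# Greedy policy for the non-terminal states 0..3
policy = ["right" if q[s][1] > q[s][0] else "left" for s in range(GOAL)]
print(policy)  # prints ['right', 'right', 'right', 'right']
```

The agent is never told the rules; it discovers the chain of actions leading to the reward purely from experience. Practical systems replace the lookup table with neural networks, which is what makes the approach applicable to far larger problems.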

1. 32 Detailed ChatGPT Statistics – Users, Revenue and Trends (demandsage.com)
2. Prompt Economics: Understanding ChatGPT Costs | One AI
3. [1706.03762] Attention Is All You Need (arxiv.org)

For a glossary of technical terms, please visit this link: Fund Glossary | Erste Asset Management

Legal note:

Forecasts are not a reliable indicator of future performance.

Legal disclaimer

This document is an advertisement. Unless indicated otherwise, source: Erste Asset Management GmbH. The language of communication of the sales offices is German and the languages of communication of the Management Company also include English.

The prospectus for UCITS funds (including any amendments) is prepared and published in accordance with the provisions of the InvFG 2011 as amended. Information for Investors pursuant to § 21 AIFMG is prepared for the alternative investment funds (AIF) administered by Erste Asset Management GmbH pursuant to the provisions of the AIFMG in conjunction with the InvFG 2011.

The currently valid versions of the prospectus, the Information for Investors pursuant to § 21 AIFMG, and the key information document can be found on the website www.erste-am.com under “Mandatory publications” and can be obtained free of charge by interested investors at the offices of the Management Company and at the offices of the depositary bank. The exact date of the most recent publication of the prospectus, the languages in which the fund prospectus or the Information for Investors pursuant to § 21 AIFMG and the key information document are available, and any other locations where the documents can be obtained are indicated on the website www.erste-am.com. A summary of the investor rights is available in German and English on the website www.erste-am.com/investor-rights and can also be obtained from the Management Company.

The Management Company can decide to suspend the provisions it has taken for the sale of unit certificates in other countries in accordance with the regulatory requirements.

Note: You are about to purchase a product that may be difficult to understand. We recommend that you read the indicated fund documents before making an investment decision. In addition to the locations listed above, you can obtain these documents free of charge at the offices of the referring Sparkassen bank and the offices of Erste Bank der oesterreichischen Sparkassen AG. You can also access these documents electronically at www.erste-am.com.

Our analyses and conclusions are general in nature and do not take into account the individual characteristics of our investors in terms of earnings, taxation, experience and knowledge, investment objective, financial position, capacity for loss, and risk tolerance. Past performance is not a reliable indicator of the future performance of a fund.

Please note: Investments in securities entail risks in addition to the opportunities presented here. The value of units and their earnings can rise and fall. Changes in exchange rates can also have a positive or negative effect on the value of an investment. For this reason, you may receive less than your originally invested amount when you redeem your units. Persons who are interested in purchasing units in investment funds are advised to read the current fund prospectus(es) and the Information for Investors pursuant to § 21 AIFMG, especially the risk notices they contain, before making an investment decision. If the fund currency is different than the investor’s home currency, changes in the relevant exchange rate can positively or negatively influence the value of the investment and the amount of the costs associated with the fund in the home currency.

We are not permitted to directly or indirectly offer, sell, transfer, or deliver this financial product to natural or legal persons whose place of residence or domicile is located in a country where this is legally prohibited. In this case, we may not provide any product information, either.

Please consult the corresponding information in the fund prospectus and the Information for Investors pursuant to § 21 AIFMG for restrictions on the sale of the fund to American or Russian citizens.

It is expressly noted that this communication does not provide any investment recommendations, but only expresses our current market assessment. Thus, this communication is not a substitute for investment advice.

This document does not represent a sales activity of the Management Company and therefore may not be construed as an offer for the purchase or sale of financial or investment instruments.

Erste Asset Management GmbH is affiliated with Erste Bank and the Austrian Sparkassen banks.

Please also read the “Information about us and our securities services” published by your bank.